AI’s Potential, Pitfalls, Policy, and Promise: A Conversation with Futurist and Strategist Olaf Groth

Author: Olaf Groth

Founder, Professor, Author, Geostrategist helping leaders shape the future disrupted by AI, data & emerging tech amid geopolitics

No longer a distant frontier, the AI-driven shift will undoubtedly continue to redefine global economies, industries, and institutions, a transformation that calls for strategic leadership from executives and policymakers. In the coming years, it is imperative to design systems that harmonize AI capabilities with human judgement and champion ethical AI governance at this critical intersection of technology, global economics, and geopolitics.

Dr. Olaf Groth, founding CEO of Cambrian Futures and professional faculty member at UC Berkeley Haas School of Business, has spent his career at the forefront of the conversations around global AI strategy. With 25 years of experience in the corporate world, academia, and consulting, Groth brings a global lens to understanding the transformation of economies, industries, and organizations driven by AI, data, compute and cyber at the center of geopolitics and geostrategic competition.

In this interview, Groth shares his perspectives on what excites him most, where today’s leaders miss the mark, the future of AI governance, and how leaders can strategically position themselves for success in the AI era.

A Bright Horizon

The promise of AI lies in its ability to fuel innovation and disruption across numerous domains. In particular, AI capabilities have the potential to relieve friction in business processes and unlock customer value, whether internally or externally. Not only would shareholders and employees alike benefit from AI implementation, but the resulting reduction in waste would also improve efficiency.

Groth believes that AI advancements in understanding customer needs will give rise to entirely new business models and service propositions, and will help determine where and for whom they create the greatest value.

Furthermore, the emergence of AI-first solutions is also introducing new ways for businesses to create value. AI-first solutions embed AI and data-driven decision-making into the fabric of the business.

“[AI-first solutions] are exciting because they offer ways to include a huge myriad and diversity of data streams, which lead to new types of narratives, new types of service offerings that haven’t existed before, simply because AI can do certain things that humans can’t.”

Industries across the board will be revolutionized by AI. One industry where Groth is particularly excited about AI’s transformation is healthcare, especially in the chemistry research that underpins the pharmaceutical world. In the physical domain, wherever AI is being used to manage, control or monitor physical systems—robotics, passenger drones, the space industry and exploration, and agriculture—AI can help us understand nature more profoundly.

Navigating the Risks of AI

With any transformation comes inevitable risks and concerns, and the noise amid the AI hype is no exception. Groth recognizes three central issues that executives and organizations are encountering when implementing AI today, as well as what leaders commonly overestimate and underestimate about AI’s current capabilities.

Where’s the ROI?

In the near term, he recognized that the return on investment is not as uniform as it needs to be to justify the initial investment. Groth emphasized that this shortcoming is primarily an organizational design issue, not a direct reflection of the AI’s capabilities. This should not come as a surprise, as generative AI entered the mainstream relatively recently, having emerged only around three years ago.

“It’s natural that we’re still experimenting with AI use cases, the value they add, and how to measure that value. However, the fact is, we’re spending more money on AI than almost anything else right now.”

The lopsided capital investment in AI may be fueling an unsustainable frenzy, prompting investors to demand proof of tangible progress now rather than wait years for consistent and measurable returns. Despite this pressure, experimentation is critical, and companies must think about the bigger picture in terms of how to understand the ROI of AI investments and proactively establish initiatives to leverage AI in their businesses.

“Companies should still have sandboxes in which you experiment, but they must be designed in very deliberate ways so you understand exactly what you’re experimenting with. Ask, ‘What are the assumptions that are no longer whole? What are the insights that you find as it relates to value add, whether that’s internally in the organization or pertaining to the customer endpoints?’”

AI and the Future of Work

“We’ve got this horrendous narrative out there that associates AI with FTE reduction.”

Framing AI adoption simply as a cost-cutting, efficiency-optimizing measure, either through reducing headcount or working hours, forms an incomplete and harmful narrative.

However, Groth acknowledged that a fair number of jobs might be lost, especially in heavily quantitative-analytical areas, and that there may be a “hockeystick” effect at some point as the technology gets better and better. And the job prospects of recent college graduates are most at risk. Entry-level tasks that serve as a gateway to more strategic roles are easily automated by AI, limiting opportunities to evolve in the workplace.

“I think the generation just out of college will be the first ones to feel it because they are inexperienced and haven’t yet matured into higher value-add roles. So they might get cut off at the knees, as it were. We have to be very concerned—a young person needs a chance to learn and grow.”

Furthermore, in a risky phase of the AI hype, companies are emphasizing cutting costs as the central payoff. Investors, driven by financial pressures, aim to maximize value at the lowest possible price, only reinforcing this economically appealing but short-sighted belief.

From a change management perspective, the narrative that equates AI adoption with FTE reduction is incredibly detrimental to organizational and employee morale.

“It’s demotivating employees, filling people with fear and anxiety.”

Groth believes that we may be getting ahead of ourselves. Rather than create hysteria around job loss due to AI, companies should instead redesign jobs for human-AI synergy.

“If we were to design jobs that integrate humans and AI, enabling people to play at a much higher plane of performance, then keeping the human in the picture is actually very justified from a financial standpoint. We should lead with that, rather than with FTE reduction. If we don’t, then we shouldn’t be surprised when people become demotivated and don’t want to be transparent or experiment with AI.”

Deploying AI Strategically

As AI becomes a foundational, operational layer in organizations, it is imperative to be strategic about how companies deploy these technologies. Groth noted that simply saying, “We’ll deploy it like the internet, and sprinkle it across everything,” is a mindset much too expensive and misleading for business executives to adopt.

“We have to be very deliberate about where we embed AI.”

Ultimately, intentional AI deployment is what will distinguish the organizations that secure a competitive advantage from those with weaker strategic direction.

Considerations for Today’s Business Leaders

As businesses continue to experiment with AI today, they commonly overestimate its near-term benefits and underestimate its long-term potential.

What We’re Getting Wrong Today

In particular, Groth emphasizes one common misconception executives hold about AI capabilities: the disconnect between the theoretical appeal and realistic payoffs.

“It’s very normal in the executive life, and life in general, to see a shiny new thing and automatically think it is powerful. When you play with it, it intuitively makes sense how it makes your life easier, and yet that doesn’t always translate into economics.”

This perspective creates a huge fallacy with significant implications for businesses. Executives cannot assume that simply purchasing large numbers of software licenses from providers will inherently boost productivity; this view is too simplistic. Rather, increased productivity is a result of deliberate AI integration with company data and workflows.

“You only get a tight fit between AI implementation and payoff if you enable the AI with your own enterprise data. AI deployment ultimately becomes a much more prosaic issue of making sure that you have the right data, and that it is clean and accessible, not corrupted or biased.”

“This is the foundation of being able to harness the greatest value because the algorithms are best when they are let loose on proprietary data, so they can customize solutions for your enterprise.”

What We’re Overlooking Today

Though business leaders tend to overestimate AI’s transformative abilities in the short term, they also underestimate the scope of the long-term structural shifts driven by these technologies, especially as computing power continues to progress at an astounding pace. Groth believes that a wave of AI-first solutions and organizations is not far off.

“When you’re looking at the confluence of AI and something like blockchain, which underlies crypto, and you layer AI agents on top at some point—whether that’s three, five, or seven years out—you will empower an agent-enabled economy. That may sound like science fiction right now, but it could happen.”

Groth alludes to the emergence of the Agentic Twin economy as a plausible horizon that business leaders and policymakers must prepare for. In the future, agentic twins will fuse with digital twins, becoming capable of autonomous decision-making and operating as economic actors guided by human or organizational characters. To ensure responsible and prudent use, rigorous trust architectures must be carefully designed to align with “human values and institutional norms” without stifling innovation.

Future-Proofing Tomorrow’s Talent

“To state the obvious, everybody under the sun needs to study AI and data science, not necessarily to become technically proficient, but to become proficient with tools in the application layer.”

Groth asserts that everyone must take advantage of any opportunity to “get up to speed” on AI applications in order to effectively complement their own skills with it, no matter what their primary domain expertise may be.

“Anybody who has expertise of a certain kind, whether you’re a literature major or marine biology, needs to become proficient in how to use AI tools.”

As for engineers and technically proficient individuals, Groth urges them to develop a strong understanding of the broader industry landscape in which their technical expertise will be applied.

“The highest value is achieved when you pair AI with the judgement that comes with being competent in a domain. That doesn’t necessarily mean becoming a narrow expert in a field, but it does mean developing the judgement capabilities to determine not just what AI can do, but on the actual needs in the domain. It’s really hard to tweak and customize an algorithm if you don’t understand the subject matter that the AI is supposed to be deployed into.”

Real value creation is a result of synthesizing AI and data capabilities with human judgement rooted in domain knowledge. While Groth advocates for mastery of AI application use, he warns that leaders must not “over-index on data skills alone” to avoid losing the business judgement that allows AI tools to be applied meaningfully. Executives who maintain this balance will be well-positioned to guide their organizations through complexity.

Toward Ethical and Responsible AI

From the organizational level to the national and international levels, leaders must design frameworks that ensure ethically and socially responsible governance and regulation over AI systems. Groth points out that social justice and national security are each contingent on the soundness of the other.

In other words, these issues are fundamentally two sides of the same coin, and they require robust governance over AI and data, regardless of current political agendas and convictions.

“If you marginalize the dignity of certain populations and minority groups, we open ourselves to huge vulnerabilities from a security angle.”

“Whether you’re coming at it from a security angle or not, we all share the same interests, perhaps for different reasons. We need to have proper governance, which entails avoiding being corrupted, biased, or accessed by the wrong people, for the wrong reasons, and on the wrong terms.”

The Layers of AI Governance

Groth outlines five layers of AI governance, noting that this oversight must operate in hierarchical layers: top-down corporate leadership, algorithm audits, data governance, model testing before deployment, and international cooperation.

First, prudent management in corporations must be enforced, from the supervisory board and C-suite to the most junior person in the company. It is vital that everyone at the company abide by appropriate guardrails, backed by a governance regime with incentives (rewards for those who are particularly compliant and penalties for those who violate the protocols), to institutionalize a more transparent and principled culture around AI.

Second, algorithms must be carefully audited for their functionality, effectiveness, and security- and privacy-related risks, a task already performed by many hyperscalers, to avoid exposing personally identifiable information in a model.

Third, the integrity of data itself must be confirmed before it is fed into the model. It must be “clean, appropriate, uncorrupt, and rightfully owned.”

Fourth, these AI models must be tested responsibly and lawfully before deploying them into different target segments and communities. Testing doesn’t have to be incredibly time- and resource-intensive.

AI Governance Across Borders

Finally, comprehensive AI governance demands international cooperation to bridge the distinct data spheres emerging in China, the United States, and Europe. The establishment of a global accord should address the evolution of these data spheres and create clarity around how we respect and trade data. Groth believes that encouraging first steps are already underway, including existing cooperation through the Digital Economy Partnership Agreement, the G7, and the Organization for Economic Co-operation and Development. However, the establishment of a global leadership organization is key.

“While [these efforts] haven’t yet bubbled up in a global organization, you can see that different regions are starting to congeal around different types of organizations that attempt to gain some level of air traffic control around what’s happening with AI.”

Furthermore, Groth maintains that these governance systems must be interoperable, meaning that the rules and standards of each regime must be compatible with those of the others. While the tools for governance exist, including geopolitical firewalls, data centers, and digital forensics, we have yet to design and implement a functional global system.

“It’s going to be a long, hideous negotiation because data and AI are so intertwined with economic competitiveness and national security, which are highly sensitive and hard to disentangle. Lots of powerful interests get caught up in these issues, but over time, we will get there; the stakes for catastrophic failures on everybody’s part are way too high.”

Groth’s Pearls of Wisdom

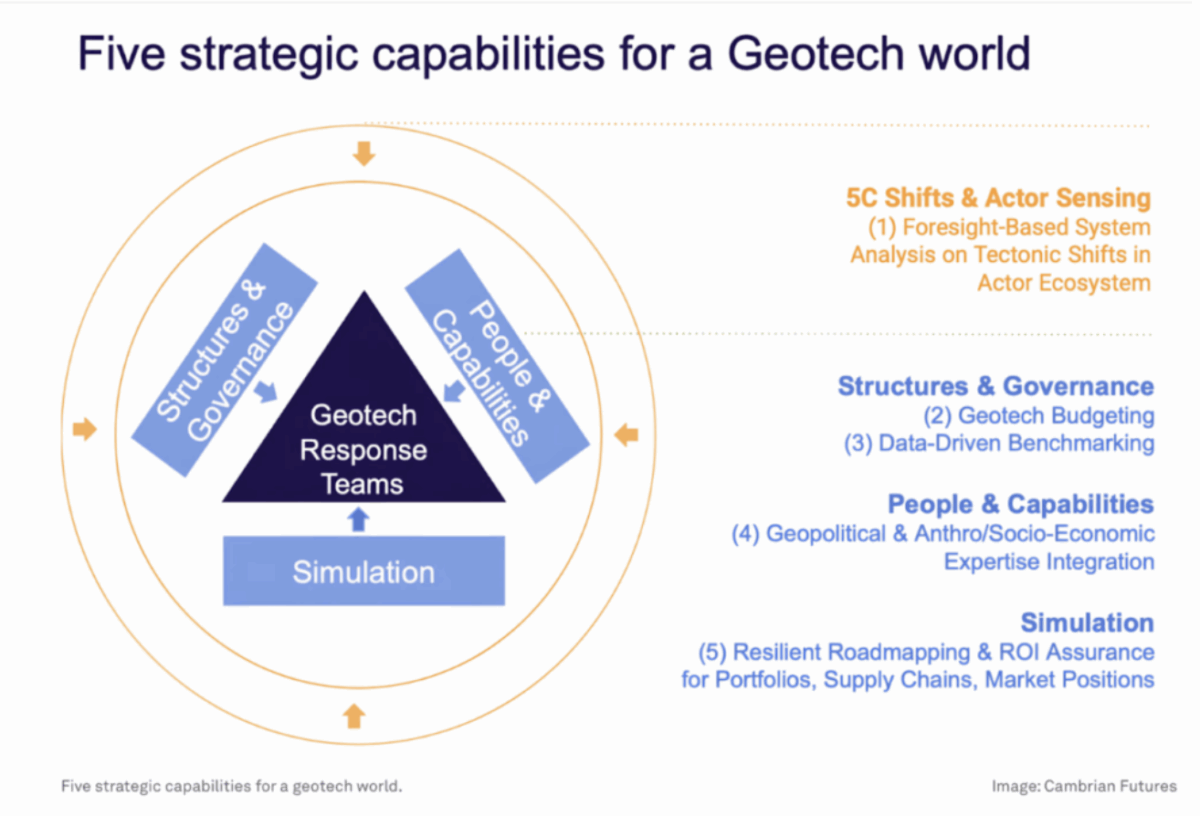

Technology is at the forefront of modern geopolitics and geoeconomics, a fact that will hold true for the next few decades. AI innovation has become inextricably linked to national security and power dynamics, and leaders simply cannot ignore this new truth. More than ever before, political and societal forces will determine which technology innovations succeed and fail, and business executives must extend their scope beyond watching traditional industry actors. Those who are blindsided will struggle to “see how the pieces connect” and anticipate future shifts. Groth shares two pieces of advice for aspiring AI leaders.

When asked to offer advice to aspiring AI entrepreneurs and business executives, Groth stated, “Don’t be the flavor of the month,” encouraging leaders to avoid a “DeepSeek moment” by innovating just over the horizon.

To buffer against political and social obstacles, entrepreneurs and executives alike must embrace systems thinking to equip their innovations with GeoTech Capabilities, requiring a “360 degree surround vision” —a deep situational awareness—of societal and political actors and their agendas. Those who develop this foresight will gain the advantage of spotting risks and opportunities earlier.

“You can no longer be narrow, focused on only one solution or one customer. You have to develop a radar.”
